How GitHub Copilot + Playwright MCP Boosted Test Automation Efficiency by up to 36%

AI is reshaping test automation. Learn how GitHub Copilot and Playwright MCP helped our engineers accelerate test creation and boost overall efficiency.

Executive Summary

This case study explores how AI-assisted tools—specifically GitHub Copilot and Playwright MCP—can accelerate test automation within a custom Playwright Accelerator framework. Two engineers were tasked with implementing previously unseen test cases under both manual and AI-assisted conditions.

The results show that AI-assisted development consistently accelerated test creation, especially during the Script Creation phase where initial drafts and locators were generated in seconds. Across four test runs, time savings averaged 24.9%, with individual gains ranging from 12.8% to 36.2%.

Qualitative feedback confirmed that AI tools provided a valuable head start by generating coherent initial artifacts such as markdown-based test designs, Page Object Models, and locators. However, engineers noted that review and adaptation effort often increased because the AI struggled with framework-specific utilities and misapplied business logic abstractions, both of which required rework.

Key Finding: AI-assisted workflows reduced implementation time by an average of 25%, with the greatest gains seen in smaller, well-defined test cases.

Background & Objectives

Organisations leading in test automation and quality engineering are increasingly exploring AI-assisted developer tools to accelerate test creation, reduce routine effort, and improve productivity. This experiment assessed whether GitHub Copilot combined with Playwright MCP (Model Context Protocol) provided measurable efficiency gains when used inside a custom Playwright test automation framework.

Objective: Measure how much AI assistance reduces implementation time (in minutes) when engineers use Copilot and Playwright MCP for Page Object Model generation, code completion, refactoring, and debugging—all while maintaining identical quality.

Methodology

The study followed a controlled experiment design to isolate the effect of AI assistance on test implementation time.

Scope

Framework: TTC Global’s Test Automation Accelerator Framework, custom-built with Playwright.

AI Tool: GitHub Copilot with Playwright MCP.

Participants: 2 engineers.

Test Artifacts: 2 new test cases, previously unseen by both engineers.

System-under-test: Workday HRIS

Exit Criteria (Quality Control)

All automated tests must meet the following conditions to ensure outcome quality is identical across conditions:

Pass consistently (robust execution without flakiness).

Conform to the established coding standards and framework conventions.

Meet the same acceptance criteria regardless of whether AI assistance is used.

Only efficiency (time to completion) is measured for each implementation task.

Data Collected

Engineer ID (anonymised)

Test Case ID

Condition (AI vs No-AI)

Time to completion for:

Test Case Analysis

Script Creation

Gap/Review/Update

Code Merge

AI Prompts used

Observations, comments
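As an illustration only, the fields above could be captured in a typed record like the one below. The type and field names (`RunRecord`, `PhaseTimes`, `totalMinutes`) are hypothetical and not part of the Accelerator framework; the sample values come from Engineer A's first manual run in the Timings table, and the test case ID is a placeholder.

```typescript
// Hypothetical record shape for one timed run; names are illustrative only.
type Condition = 'Manual' | 'AI-assisted';

interface PhaseTimes {
  testCaseAnalysis: number; // minutes
  scriptCreation: number; // minutes
  reviewUpdate: number; // minutes (Gap/Review/Update)
  codeMerge: number; // minutes
}

interface RunRecord {
  engineerId: string; // anonymised
  testCaseId: string;
  condition: Condition;
  phases: PhaseTimes;
  prompts: string[]; // AI prompts used; empty for manual runs
  observations: string;
}

// Total time to completion is the sum of the four timed phases.
function totalMinutes(run: RunRecord): number {
  const p = run.phases;
  return p.testCaseAnalysis + p.scriptCreation + p.reviewUpdate + p.codeMerge;
}

// Engineer A, first manual run, with phase times taken from the Timings table.
const engineerAManualRun: RunRecord = {
  engineerId: 'A',
  testCaseId: 'TC-1', // placeholder ID, assumed for this sketch
  condition: 'Manual',
  phases: { testCaseAnalysis: 15.0, scriptCreation: 45.0, reviewUpdate: 15.0, codeMerge: 9.6 },
  prompts: [],
  observations: '',
};

const engineerAManualTotal = totalMinutes(engineerAManualRun);
```

Keeping the phases in a nested object makes it straightforward to compare a single phase, such as Script Creation, across conditions.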

Results

The table below compares manual and AI-assisted runs for two engineers across two test cases, followed by aggregate statistics. Times are in minutes, as recorded in the experiment notes.

Timings

| Engineer | Pass | Condition | Test Case Analysis (min) | Script Creation (min) | Review/Update (min) | Code Merge (min) | Total (min) | Time saved with AI (min / %) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| A | 1 | Manual | 15.0 | 45.0 | 15.0 | 9.6 | 84.6 | |
| A | 1 | AI-assisted | 15.0 | 9.6 | 19.8 | 9.6 | 54.0 | 30.6 min / 36.2% |
| B | 1 | Manual | 15.0 | 45.0 | 9.6 | 9.6 | 79.2 | |
| B | 1 | AI-assisted | 15.0 | 9.6 | 19.8 | 9.6 | 54.0 | 25.2 min / 31.8% |
| A | 2 | Manual | 30.0 | 74.4 | 36.0 | 9.6 | 150.0 | |
| A | 2 | AI-assisted | 30.0 | 18.0 | 73.2 | 9.6 | 130.8 | 19.2 min / 12.8% |
| B | 2 | Manual | 40.2 | 150.0 | 9.6 | 9.6 | 209.4 | |
| B | 2 | AI-assisted | 40.2 | 19.8 | 100.2 | 9.6 | 169.8 | 39.6 min / 18.9% |

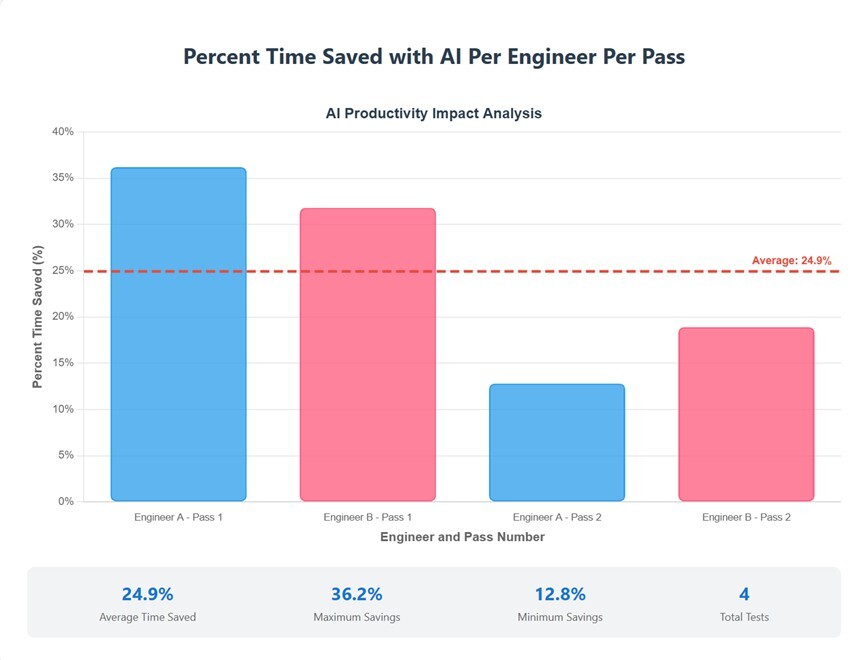

The chart shows the percentage of time saved by the AI-assisted workflow in each run.

These results demonstrate a consistent time reduction across all four runs, with the largest savings achieved during Script Creation.

Aggregate stats (4 runs):

Average percent saved: 24.9%.

Percent spread: 12.8% to 36.2% (range 23.4 percentage points).

Std. dev. (percent): 9.45 percentage points (population standard deviation, n = 4).
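For readers who want to check the arithmetic, here is a minimal sketch that recomputes these aggregates from the manual and AI-assisted totals in the Timings table. The function names are our own for this example, and the standard deviation is the population form (dividing by n), which is the variant that matches the 9.45 figure.

```typescript
// [manual total, AI-assisted total] in minutes, one pair per run,
// copied from the Total (min) column of the Timings table.
const runTotals: Array<[number, number]> = [
  [84.6, 54.0], // Engineer A, pass 1
  [79.2, 54.0], // Engineer B, pass 1
  [150.0, 130.8], // Engineer A, pass 2
  [209.4, 169.8], // Engineer B, pass 2
];

// Percentage of the manual time saved by the AI-assisted run.
function percentSaved(manual: number, assisted: number): number {
  return ((manual - assisted) / manual) * 100;
}

function mean(values: number[]): number {
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

// Population standard deviation: divides by n, not n - 1.
function populationStdDev(values: number[]): number {
  const m = mean(values);
  return Math.sqrt(mean(values.map((v) => (v - m) ** 2)));
}

const saved = runTotals.map(([manual, assisted]) => percentSaved(manual, assisted));
const averageSaved = mean(saved); // about 24.9
const stdDevSaved = populationStdDev(saved); // about 9.45
const spread = Math.max(...saved) - Math.min(...saved); // about 23.4
```

With only four runs, the sample standard deviation (dividing by n - 1) would come out noticeably higher, so it is worth stating which form is reported.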

Qualitative Insights: What the Engineers Experienced

Common positive effects observed:

AI-assisted runs produced rapid initial drafts, including markdown-based test-design files with Page Object Model (POM) locators and step-by-step references.

Engineers reported the AI generated a consistent starting point and reduced the time spent on mechanical, repetitive coding tasks (notably initial script creation).

In some cases (Expense Report), the AI produced data model and JSON artifacts and created complex locators, such as those for multi-select fields, without explicit instructions.

Common limitations and challenges:

The AI often misapplied business logic abstractions, requiring manual rework to align with the team’s framework conventions. The AI could not reliably use framework-specific custom utilities (e.g., tables, strings, dates, or bespoke selector engines) without specific guidance.

Review/Update time sometimes increased after AI-assisted script creation because engineers needed to adapt generated code to internal utilities, conventions, and edge cases.

Small fixes or iterative adjustments were, in some cases, faster using other tools or manual edits than the default MCP flow.

Overall, the qualitative data reinforced that AI-assisted test automation is most valuable for rapid prototyping but still requires human oversight for framework alignment.

Summary

The experiment confirms that AI assistance using GitHub Copilot and Playwright MCP consistently reduces time during the Script Creation phase, especially by producing coherent initial artifacts such as markdown test design files and locators. The overall time savings were largest in shorter, well-scoped test cases (e.g., Change Work Contact Information). For complex workflows, such as the Expense Report test case, benefits were still measurable but partly offset by the extra time needed to adapt AI-generated code to framework-specific utilities.

Practical implication: Teams adopting AI-assisted test generation should expect faster first drafts and lower initial coding effort, but should plan additional time to review, adapt, and integrate generated code with team-specific utilities and patterns. Where the AI is given framework-aware prompts, the net benefit increases.

Conclusion

The study confirms that integrating GitHub Copilot and Playwright MCP can deliver efficiency gains of up to 36%, and 25% on average, for test automation engineers working within custom frameworks. AI support is most effective in accelerating the early coding phases, providing faster first drafts and reducing routine effort. However, its effectiveness is moderated by the need for manual refinement when adapting AI-generated code to framework-specific utilities and conventions.

For organisations, the practical implication is clear: adopting AI-assisted test generation can increase automation engineer throughput and reduce routine coding time, but to maximise benefits, teams should:

Provide framework-aware prompts and fine-tuned guidance.

Allocate time for review and integration of AI-generated code.

Apply AI most effectively to smaller, well-scoped tasks where benefits are maximised.

Overall, this study demonstrates that AI-assisted tools can significantly enhance test automation efficiency without compromising quality, representing a compelling opportunity for organisations seeking to accelerate delivery.

Update from Mei Reyes-Tsai, GM – Innovation and Technology, NZ:

Since we completed this experiment, Playwright has released new test agents (October 2025) that are remarkably similar to the agents we developed during our research. This alignment with the latest innovations from the Playwright founders and industry experts is a strong validation of our approach and direction. It’s exciting to see our work reflected in the broader ecosystem!

We’ll soon publish a new round of experiments focused on Playwright’s native test agents—sharing insights as we continue to explore the future of AI-augmented test automation.

See it in action

Want to see how this works in practice? We will be running a webinar on this soon, so watch this space!

Curious about our prompts?

Would you like to see the prompts that we used during this experiment? Fill out this form and we will send them to you.

Read Our Other Test Lab Blogs

We'll be publishing our R&D under Test Lab! Browse our other experiments below:

- Test Lab: TTC Global's Innovation Research Series

- Can AI Agents Migrate Your Test Automation Framework Autonomously?

Appendix

These are the test cases automated in Workday for this experiment.

Test Case 1 — Change Work Contact Information

Navigated to dashboard (Home - Workday).

Clicked into the global search bar.

Searched for "Change My Contact Information".

Selected "Change My Work Contact Information" from suggested results.

Opened the workflow page.

Located the "Work" section in the contact information form.

Clicked "Edit Email" in the Work Email section.

Entered new work email address: new.email@workday.net.

Clicked "Save Email".

Clicked "Submit" in the action bar.

Observed confirmation dialog: "You have submitted".

Closed confirmation dialog.

Verified new email address is displayed in Work contact info (My Contact Information page).
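To give a feel for the kind of Page Object Model these steps map to, here is a minimal sketch covering the steps from "Edit Email" through "Submit". It is not the code produced in the experiment: the class name is hypothetical, the role and name pairs are guesses from the step wording (the "Email Address" textbox label in particular is an assumption), and the real Workday DOM may need different locators. `PageLike` and `LocatorLike` are small stand-ins for the `getByRole`, `click`, and `fill` calls on Playwright's `Page` and `Locator`, so the sketch runs without a browser.

```typescript
// Minimal stand-ins for the parts of Playwright's Page/Locator API used here.
// In a real suite, import `Page` and `Locator` from '@playwright/test' instead.
interface LocatorLike {
  click(): Promise<void>;
  fill(value: string): Promise<void>;
}

interface PageLike {
  getByRole(role: string, options?: { name?: string }): LocatorLike;
}

// Hypothetical page object for the "Change My Work Contact Information" task.
class WorkContactInfoPage {
  private readonly page: PageLike;

  constructor(page: PageLike) {
    this.page = page;
  }

  // Edits the work email, saves it, and submits the change.
  async changeWorkEmail(newEmail: string): Promise<void> {
    await this.page.getByRole('button', { name: 'Edit Email' }).click();
    // Assumed accessible name for the email input; verify against the real page.
    await this.page.getByRole('textbox', { name: 'Email Address' }).fill(newEmail);
    await this.page.getByRole('button', { name: 'Save Email' }).click();
    await this.page.getByRole('button', { name: 'Submit' }).click();
  }
}
```

In a Playwright test this would be called as `await new WorkContactInfoPage(page).changeWorkEmail('new.email@workday.net')`, followed by assertions on the confirmation dialog and the updated contact information.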

Test Case 2 — Create Expense Report

Step 1: Navigate to Workday Dashboard

Open Workday and ensure you are logged in as Logan McNeil.

Step 2: Search and Start “Create Expense Report”

Use the “Search Workday” combobox to find “Create Expense.”

Select “Create Expense Report” from the results.

Step 3: Fill Expense Line Details

Go to the “Expense Lines” tab.

Click “Add” to create a new expense line.

Enter the following:

Expense Date: 09/18/2025

Expense Item: Office Supplies

Quantity: 1

Per Unit Amount: $24.00

Total Amount: $24.00

Currency: USD

Company: Global Modern Services, Inc. (USA)

Cost Center: 41600 HR Services

Region: Headquarters - Corporate

Additional Worktags: Location: San Francisco

Billable: Unchecked

Receipt Included: Unchecked (no receipt attached)

Step 4: Submit Expense Report

Click the “Submit” button.

Confirm that no receipt is required for expenses under $25.

Ensure all required fields are filled; no validation errors for missing receipt.

Step 5: Verify Submission

Observe the “You have submitted” confirmation.

Confirm the expense report number, date, and total are displayed:

Expense Report: EXP-00011153

Date: 09/18/2025

Total: $24.00

“Process Successfully Completed” is shown.

Step 6: View Details

Click “View Details” to review the submitted expense report.

Step 7: Complete Workflow

Click “Done” to return to the Workday home page.
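The expense line in Step 3 lends itself to a typed test-data object, similar in spirit to the data model artifacts mentioned in the qualitative insights. The sketch below is our own illustration rather than the generated artifact: the interface, field names, and `validateExpenseLine` helper are hypothetical, and treating amounts of $25 or more as requiring a receipt is an assumption inferred from Step 4.

```typescript
// Hypothetical test-data model for the expense line entered in Step 3.
interface ExpenseLine {
  expenseDate: string; // MM/DD/YYYY, as entered in the form
  expenseItem: string;
  quantity: number;
  perUnitAmount: number;
  totalAmount: number;
  currency: string;
  company: string;
  costCenter: string;
  region: string;
  additionalWorktags: string[];
  billable: boolean;
  receiptIncluded: boolean;
}

const officeSuppliesLine: ExpenseLine = {
  expenseDate: '09/18/2025',
  expenseItem: 'Office Supplies',
  quantity: 1,
  perUnitAmount: 24.0,
  totalAmount: 24.0,
  currency: 'USD',
  company: 'Global Modern Services, Inc. (USA)',
  costCenter: '41600 HR Services',
  region: 'Headquarters - Corporate',
  additionalWorktags: ['Location: San Francisco'],
  billable: false,
  receiptIncluded: false,
};

// Step 4 states that no receipt is required under $25; requiring one at or
// above that amount is an assumption made for this sketch.
const RECEIPT_THRESHOLD_USD = 25;

// Returns a list of problems; an empty list means the test data is consistent.
function validateExpenseLine(line: ExpenseLine): string[] {
  const problems: string[] = [];
  if (Math.abs(line.quantity * line.perUnitAmount - line.totalAmount) > 0.005) {
    problems.push('total does not equal quantity * per-unit amount');
  }
  if (line.totalAmount >= RECEIPT_THRESHOLD_USD && !line.receiptIncluded) {
    problems.push('receipt required at or above the threshold');
  }
  return problems;
}

const officeSuppliesProblems = validateExpenseLine(officeSuppliesLine);
```

Checking the data before driving the UI helps separate test-data mistakes from genuine application failures when a run goes red.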