Rethinking the Test Automation Pyramid

I was recently at the TestingMind conference in Houston, where one of the speakers made a somewhat provocative claim: many organisations have an inverted testing pyramid, and that is OK.

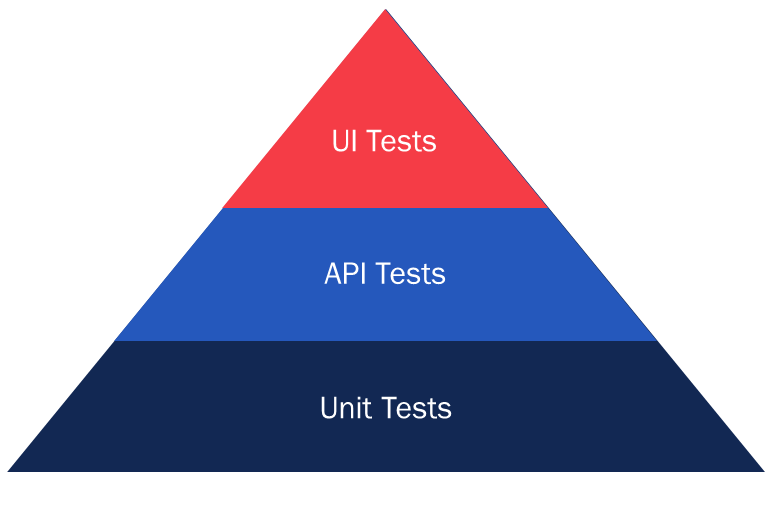

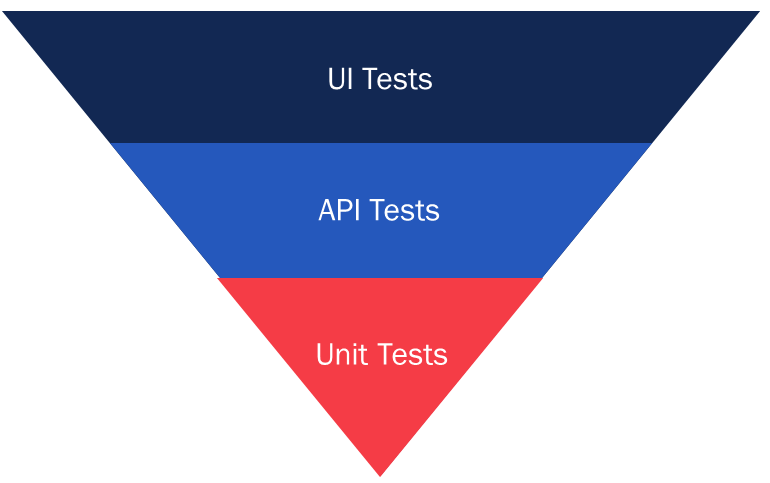

First, let’s look at the Test Automation pyramid. It is now accepted wisdom that we should have the most Test Automation at the unit level, less at the Application Programming Interface (API) level and the least at the User Interface (UI) level. We should aim to automate most of our tests or checks, leaving the remainder to be conducted in a primarily exploratory manner.

This is visualized as a pyramid like so:

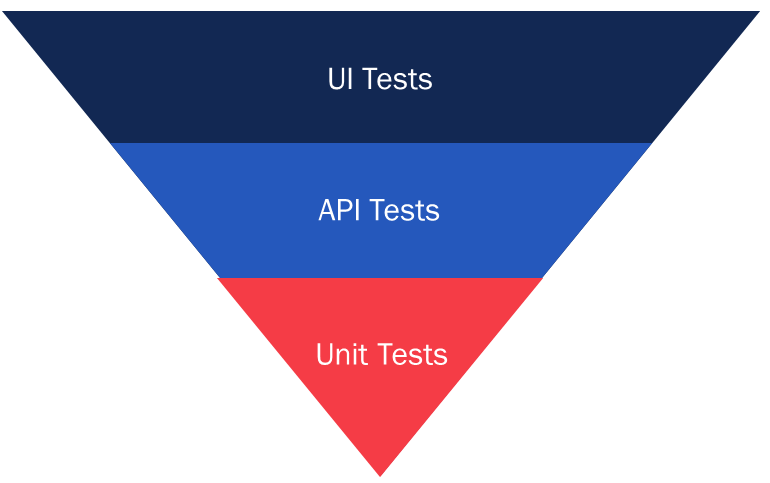

According to the accepted wisdom, this pyramid contrasts with the anti-pattern found at most organisations, where the majority of testing is done at the UI level, some at the API level and very little, or even none, at the unit level.

This is visualized as an inverted pyramid like so:

I am the first to admit that the Test Automation pyramid is an idealized scenario and that its aim is to give testers and developers a tool to help their organisations shift their focus. Its goal is not to prescribe any “magic ratio” between UI, API and unit tests.

Now, let’s go back to the speaker’s claim and break it down into two parts. “Many organisations have an inverted testing pyramid”. Yes, I agree with this. “And that is OK”. In some contexts, maybe, but in general I disagree, and here’s why.

An ideal test should be:

- Comprehensive: Provide a high level of coverage of your system under test.

- Reproducible: Flaky tests create significant noise and over time desensitize team members to the test results.

- Transparent: Failure messages point directly to the root cause, which makes analysis and resolution easier.

- Atomic: The test is highly granular with minimal dependencies, allowing it to be run on demand.

- Focused: There is a clear test intent that is easily understandable.

- Fast: Fast feedback allows developers to be more productive.

All things being equal, unit tests typically achieve these goals better than API or UI tests.
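To make these properties concrete, here is a minimal sketch of two unit tests written with Python's standard `unittest` module. The `apply_discount` function and its behaviour are hypothetical, invented purely to have something small to test; the point is the shape of the tests, not the function.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical function under test: reduce price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError(f"percent must be between 0 and 100, got {percent}")
    return round(price * (100 - percent) / 100, 2)


class ApplyDiscountTest(unittest.TestCase):
    # Each test checks one behaviour (focused), needs no database, network
    # or browser (atomic), and runs in milliseconds (fast).

    def test_ten_percent_off(self):
        # A descriptive message makes a failure point at the cause (transparent).
        self.assertEqual(apply_discount(200.0, 10), 180.0,
                         "10% off 200.00 should be 180.00")

    def test_rejects_percent_over_100(self):
        # Invalid input must fail loudly rather than return a wrong price.
        with self.assertRaises(ValueError):
            apply_discount(50.0, 150)
```

Saved as, say, `test_discount.py` (a hypothetical file name), the suite can be run with `python -m unittest test_discount` and needs no browser, server or test data to be set up first.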

Whenever I have seen organisations make anything more than a token investment in proper code-level unit tests, their unit tests have invariably outnumbered their automated UI tests. This is because unit tests are much quicker to develop, so you will very likely have more of them!

Additionally, if you are using Test Driven Development/Design (TDD), which I highly recommend, these unit tests are implemented during development. Red, Green, Refactor anyone? TDD not only ensures that unit tests actually get written, but more importantly it helps you write testable code, which typically means “better code”. With UI Test Automation, by contrast, there is typically a longer delay between development and the implementation of the automated tests.

I began to do some thought experiments.

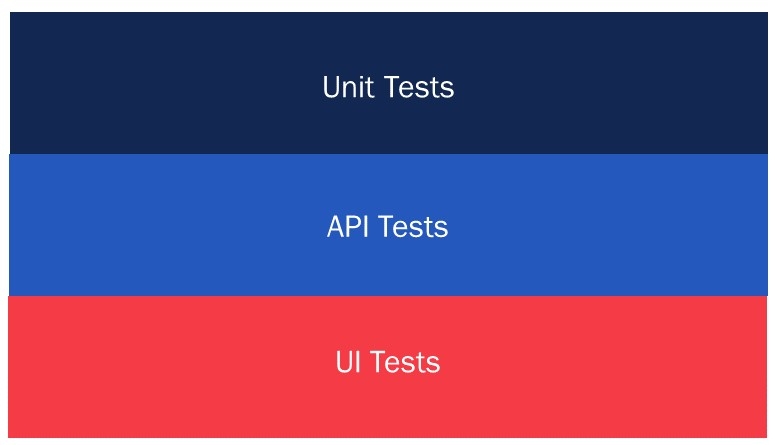

First of all, let’s assume that the distribution of effort between different testing categories is even.

That would be visualized as a pillar like so:

Note: When Test Automation is done by a separate team, as can often be the case for UI and API Test Automation, it is easy to measure how much effort is spent there. But when it is done as part of development, as is the case with Test Driven or Behavior Driven Development, it is harder to measure.

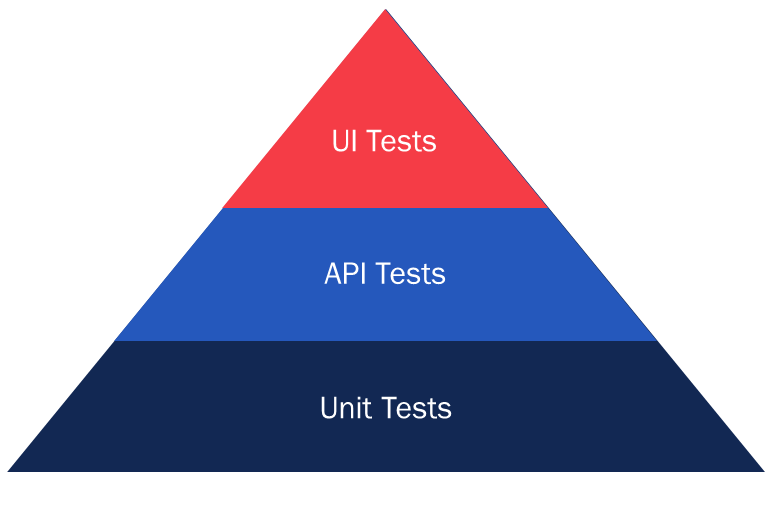

Now, let’s assume that we have invested 100 hours into each category and let’s estimate how productive we are with the different Test Automation categories.

If UI Test Automation takes us two hours to develop each automated test case, we would have developed 50 test cases.

If for API Test Automation we can develop two automated test cases per hour, then we would have developed 200 test cases.

If for unit Test Automation we can develop five automated test cases per hour, then we would have developed 500 test cases.

Note: These productivity numbers vary greatly by complexity, technology, skill etc. and should be treated purely as illustrative. However, they are in line with what I have seen anecdotally.

With these numbers plugged in, we see that an equal allocation of effort will result in a pyramid-shaped distribution of automated test case numbers like below.
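The effort arithmetic above can be reproduced in a few lines of Python. This is a minimal sketch: the 100 hours and the per-category productivity rates are the illustrative figures from this article, and the names `EFFORT_HOURS`, `TESTS_PER_HOUR` and `count_test_cases` are made up for the example.

```python
# Illustrative figures from the thought experiment, not real measurements.
EFFORT_HOURS = 100  # equal effort invested in each category

# Automated test cases developed per hour of effort (assumed rates).
TESTS_PER_HOUR = {
    "UI": 0.5,   # two hours per UI test case
    "API": 2,    # two API test cases per hour
    "Unit": 5,   # five unit test cases per hour
}


def count_test_cases(effort_hours, tests_per_hour):
    """Return how many test cases each category yields for the same effort."""
    return {
        category: round(effort_hours * rate)
        for category, rate in tests_per_hour.items()
    }


if __name__ == "__main__":
    counts = count_test_cases(EFFORT_HOURS, TESTS_PER_HOUR)
    for category, count in counts.items():
        print(f"{category:>4}: {count} test cases")
```

Running it prints 50 UI, 200 API and 500 unit test cases. Swapping in your own team's rates shows how sensitive the shape is to these assumptions.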

But there is another aspect that we may be referring to with the Test Automation Pyramid, and that is execution time.

Let’s make some more assumptions.

If our 50 automated UI test cases take on average 180 seconds (3 minutes) to run, our total serial run time will be approximately 150 minutes.

If our 200 automated API test cases take on average 10 seconds to run, our total serial run time will be approximately 33 minutes.

If our 500 automated unit test cases take on average 0.5 seconds to run, our total serial run time will be approximately 250 seconds (just over 4 minutes).

Therefore, an even distribution of effort and a conventional pyramid-shaped distribution of automated test cases will result in an inverted pyramid for test execution time.
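The execution-time side can be sketched the same way. This hedged Python example uses the 500 unit test cases from the effort estimate together with the assumed average run time per test case in each category; the names `SUITES`, `serial_run_seconds` and `format_duration` are hypothetical and the figures are illustrative, not measurements.

```python
# Test case counts and average run times assumed in the thought experiment.
SUITES = {
    # category: (number of test cases, average seconds per test case)
    "UI": (50, 180),
    "API": (200, 10),
    "Unit": (500, 0.5),
}


def serial_run_seconds(count, avg_seconds):
    """Total wall-clock time if every test case runs one after another."""
    return count * avg_seconds


def format_duration(seconds):
    """Render seconds as minutes and seconds, e.g. 2000 -> '33m 20s'."""
    minutes, secs = divmod(round(seconds), 60)
    return f"{minutes}m {secs:02d}s"


if __name__ == "__main__":
    for category, (count, avg) in SUITES.items():
        total = serial_run_seconds(count, avg)
        print(f"{category:>4}: {count:>3} tests, "
              f"{format_duration(total)} serial run time")
```

It reports 150 minutes for UI, about 33 minutes for API and just over 4 minutes for unit tests: the category with the fewest test cases takes by far the longest to run.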

For me, these numbers tell an interesting story. First, we need to be very clear that the Test Automation Pyramid is illustrative, not prescriptive. Second, we need to be very clear about what, if anything, the pyramid is measuring. Is it effort, number of test cases, execution time, coverage or some other metric? Finally, I think they show that organisations investing in unit testing should have a proportionately high number of unit tests compared with UI and API tests.

Another perspective discussed was that UI Test Automation is often the pragmatic approach to use first when you either don’t have access to the code or the architecture doesn’t expose suitable APIs. This approach has merit for Commercial Off The Shelf (COTS) software, where you often don’t have access to the source code, or the APIs are proprietary and difficult to test. It also has merit for legacy software that is architected as a monolith and may be written in a language with weak tool support for unit testing. In both cases I see this as a limitation of the system’s technology stack and/or architecture, not as a desirable approach to Test Automation.

Overall, the Test Automation pyramid encourages the correct behavior: shifting the focus of Test Automation away from the UI layer, which is slow, brittle and costly. However, this does not mean you need to charge thoughtlessly into creating huge numbers of unit tests to balance out a large UI Test Automation suite. We should aim to understand the intent of each test and implement it at the most granular, or atomic, level possible. This shift is not straightforward, and it requires collaboration between developers and testers, but it will pay dividends for those who make it.

Interesting articles on the Test Automation Pyramid

https://martinfowler.com/bliki/TestPyramid.html

https://testing.googleblog.com/2015/04/just-say-no-to-more-end-to-end-tests.html

https://martinfowler.com/articles/practical-test-pyramid.html

https://www.joecolantonio.com/why-the-testing-pyramid-is-misleading-think-scales/